Amazon now typically asks interviewees to code in an online document. Now that you know what questions to expect, let's focus on how to prepare.

Below is our four-step preparation plan for Amazon data scientist candidates. If you're preparing for more companies than just Amazon, check our general data science interview preparation guide. Most candidates fail to do this, but before spending tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you.

, which, although it's designed around software development, should give you an idea of what they're looking for.

Note that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. Offers free courses on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and others.

Mock Data Science Interview

Make sure you have at least one story or example for each of the principles, from a wide range of positions and projects. Finally, a great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

Trust us, it works. Practicing by yourself will only take you so far. One of the main challenges of data scientist interviews at Amazon is communicating your different answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you. If possible, a great place to start is to practice with friends.

However, be warned, as you may come up against the following problems. It's hard to know if the feedback you get is accurate. Your friends are unlikely to have insider knowledge of interviews at your target company. On peer platforms, people often waste your time by not showing up. For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

Building Career-specific Data Science Interview Skills

That's an ROI of 100x!

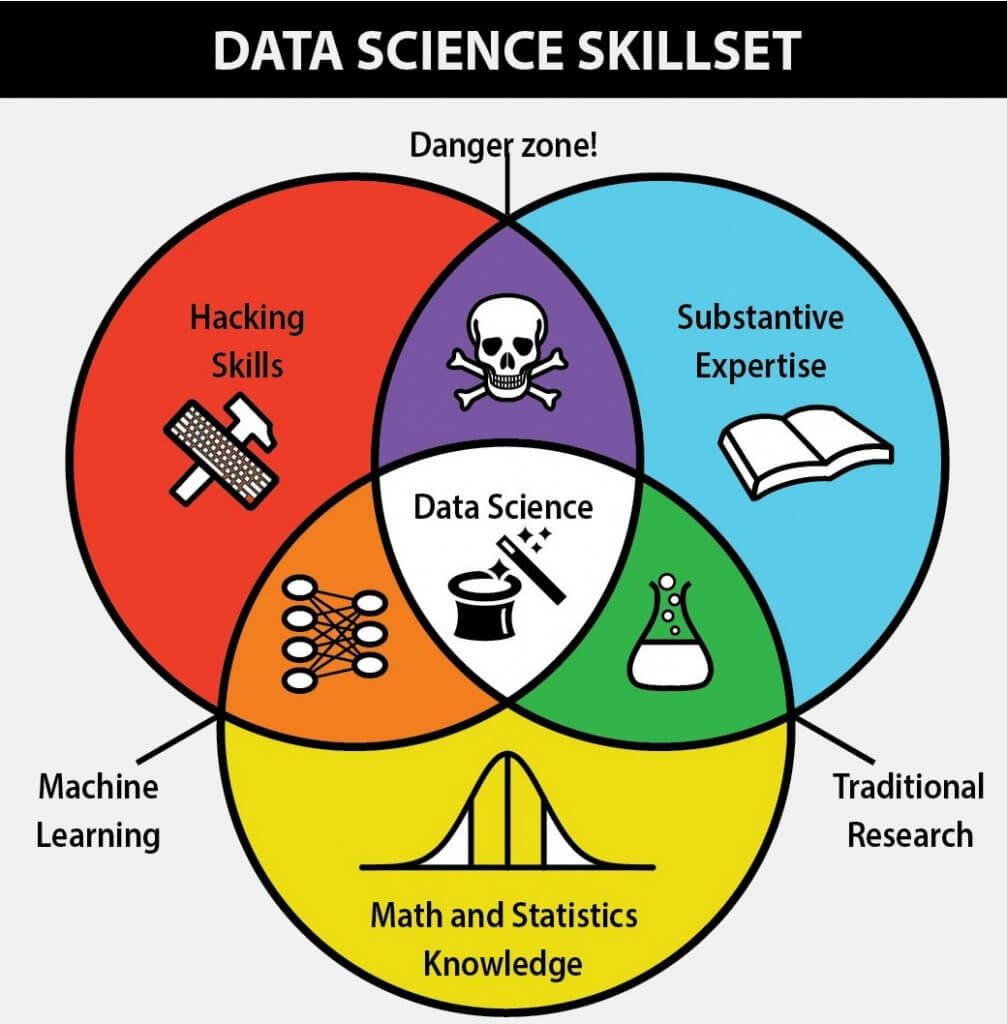

Data science is quite a large and diverse field. As a result, it is really difficult to be a jack of all trades. Traditionally, data science focuses on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mostly cover the mathematical basics you might need to brush up on (or even take a whole course on).

While I understand most of you reading this are more math heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. Python and R are the most popular languages in the data science space. I have also come across C/C++, Java and Scala.

How To Optimize Machine Learning Models In Interviews

Common Python libraries of choice are matplotlib, numpy, pandas and scikit-learn. It is common to see the majority of data scientists falling into one of two camps: Mathematicians and Database Architects. If you are the second one, the blog won't help you much (YOU ARE ALREADY AWESOME!). If you are among the first group (like me), chances are you feel that writing a double nested SQL query is an utter nightmare.

This might be collecting sensor data, scraping websites or carrying out surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to perform some data quality checks.
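To make the quality-check step concrete, here is a minimal sketch using only the Python standard library. The sensor records, the field names and the `quality_report` helper are all hypothetical; the two checks shown (missing values and exact duplicates) are just common starting points, not a complete list.

```python
import json

# Hypothetical sensor readings in JSON Lines form: one JSON object per line.
raw_lines = [
    '{"sensor_id": "a1", "temperature": 21.5}',
    '{"sensor_id": "a2", "temperature": null}',
    '{"sensor_id": "a1", "temperature": 21.5}',
    '{"sensor_id": "a3"}',
]

records = [json.loads(line) for line in raw_lines]


def quality_report(rows, required_fields):
    """Count missing values per required field and exact duplicate rows."""
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = 0
    for row in rows:
        for field in required_fields:
            # Treat both an absent key and an explicit null as missing.
            if row.get(field) is None:
                missing[field] += 1
        # Serialize with sorted keys so key order does not hide duplicates.
        key = json.dumps(row, sort_keys=True)
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {"rows": len(rows), "missing": missing, "duplicates": duplicates}


report = quality_report(records, ["sensor_id", "temperature"])
print(report)
```

In a real pipeline the lines would come from a file rather than a list, and the checks would usually be extended with type and range validation.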

Achieving Excellence In Data Science Interviews

In cases of fraud, it is very common to have heavy class imbalance (e.g. only 2% of the dataset is actual fraud). Such information is important for deciding on the appropriate choices for feature engineering, modelling and model evaluation. For more information, check my blog on Fraud Detection Under Extreme Class Imbalance.
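A quick way to see why the imbalance matters is to measure it before modelling. The sketch below uses made-up labels with the 2% fraud rate from the example above. The weighting formula, n_samples / (n_classes * class_count), is one common heuristic for reweighting classes and is an assumption here, not something the text prescribes.

```python
from collections import Counter

# Hypothetical labels: 1 = fraud, 0 = legitimate (2% fraud).
labels = [1] * 20 + [0] * 980


def class_shares(y):
    """Fraction of the dataset that belongs to each class."""
    counts = Counter(y)
    return {label: count / len(y) for label, count in counts.items()}


def balanced_weights(y):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(y)
    return {label: len(y) / (len(counts) * count) for label, count in counts.items()}


shares = class_shares(labels)
weights = balanced_weights(labels)

# A model that always predicts "legitimate" looks accurate but finds no fraud.
naive_accuracy = sum(1 for label in labels if label == 0) / len(labels)

print(shares, weights, naive_accuracy)
```

The high accuracy of the always-legitimate predictor is the reason plain accuracy is a poor evaluation metric under this kind of imbalance.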

A common choice for univariate analysis is the histogram. In bivariate analysis, each feature is compared to other features in the dataset. This would include the correlation matrix, covariance matrix or my personal favorite, the scatter matrix. Scatter matrices allow us to find hidden patterns such as:

- features that should be engineered together
- features that may need to be eliminated to avoid multicollinearity

Multicollinearity is actually an issue for multiple models like linear regression and hence needs to be taken care of accordingly.
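As a rough numerical companion to eyeballing a scatter matrix, the following sketch computes pairwise Pearson correlations and flags highly correlated feature pairs. The feature names, the data and the 0.9 threshold are illustrative assumptions.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical features; height_in is just height_cm in different units.
features = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [40.0, 22.0, 35.0, 29.0, 51.0],
}


def collinear_pairs(columns, threshold=0.9):
    """Return feature pairs whose absolute correlation meets the threshold."""
    flagged = []
    for a, b in combinations(columns, 2):
        r = pearson(columns[a], columns[b])
        if abs(r) >= threshold:
            flagged.append((a, b, round(r, 3)))
    return flagged


flagged = collinear_pairs(features)
print(flagged)
```

Here only the two height columns are flagged, which suggests dropping one of them before fitting a linear model.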

In this section, we will explore some common feature engineering techniques. At times, the feature by itself may not provide useful information. Imagine using internet usage data. You will have YouTube users going as high as gigabytes while Facebook Messenger users use a couple of megabytes.
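One common remedy for features that span several orders of magnitude is a log transform. The text above does not name a specific fix, so treat this as an assumed illustration; the user names and usage figures are made up.

```python
import math

# Hypothetical monthly data usage in megabytes.
usage_mb = {
    "messenger_user": 5.0,
    "casual_browser": 500.0,
    "youtube_user": 50_000.0,
}

# log10 compresses a 10,000x spread into a difference of 4 units.
# Values must be strictly positive; math.log1p is an option when zeros occur.
log_usage = {user: math.log10(mb) for user, mb in usage_mb.items()}

for user, value in log_usage.items():
    print(f"{user}: raw={usage_mb[user]:>9.1f} MB  log10={value:.3f}")
```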

Another issue is the use of categorical values. While categorical values are common in the data science world, realize that computers can only comprehend numbers.
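A standard way to turn categories into numbers is one-hot encoding, sketched below in plain Python. The text does not name this technique, so it is shown here as one reasonable option; `one_hot_encode` and the color data are hypothetical.

```python
def one_hot_encode(values):
    """Map each category to a 0/1 vector with a single 1 in its own column."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return categories, encoded


colors = ["red", "green", "blue", "green"]
categories, encoded = one_hot_encode(colors)

print(categories)
for color, row in zip(colors, encoded):
    print(color, row)
```

Note that one-hot encoding adds one column per category, which can create exactly the kind of sparse, high-dimensional data that hurts some models.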

Essential Preparation For Data Engineering Roles

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Component Analysis, or PCA.
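To show the idea behind PCA without any third-party library, the sketch below finds the first principal component of a small 2D dataset using power iteration on the covariance matrix. In practice you would normally call a library implementation; the data, the function names and the fixed iteration count are assumptions, and the code assumes the data is not constant.

```python
from math import sqrt


def covariance_matrix(rows):
    """Return column means and the sample covariance matrix of the rows."""
    n = len(rows)
    d = len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    cov = [
        [sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
        for a in range(d)
    ]
    return means, cov


def first_principal_component(rows, iterations=200):
    """Approximate the top eigenvector of the covariance matrix."""
    means, cov = covariance_matrix(rows)
    d = len(cov)
    vector = [1.0] * d
    for _ in range(iterations):
        # Multiply by the covariance matrix, then rescale to unit length.
        product = [sum(cov[a][b] * vector[b] for b in range(d)) for a in range(d)]
        norm = sqrt(sum(v * v for v in product))
        vector = [v / norm for v in product]
    return means, vector


def project(rows, means, component):
    """Project centered rows onto the component, giving one score per row."""
    d = len(component)
    return [sum((row[j] - means[j]) * component[j] for j in range(d)) for row in rows]


# Hypothetical points lying close to the line y = 2x.
points = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]]
means, component = first_principal_component(points)
scores = project(points, means, component)

print(component, scores)
```

The two original columns are replaced by a single score per point, which is the dimensionality reduction PCA provides.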

The common categories and their sub-categories are explained in this section. Filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead, features are selected on the basis of their scores in various statistical tests for their correlation with the outcome variable.
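A filter method can be sketched as "score every feature against the outcome, then keep the best ones". The example below uses absolute Pearson correlation as the score; the housing-style feature names and numbers are invented for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def rank_features(columns, target):
    """Rank features by absolute correlation with the target, best first."""
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical features and outcome variable.
columns = {
    "door_number": [12.0, 3.0, 44.0, 7.0, 21.0],
    "square_meters": [50.0, 70.0, 90.0, 110.0, 130.0],
}
price = [150.0, 210.0, 260.0, 335.0, 390.0]

ranking = rank_features(columns, price)
selected = [name for name, _ in ranking[:1]]  # keep the single best feature

print(ranking, selected)
```

No model is trained at any point, which is what makes this a filter method rather than a wrapper method.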

Common methods under this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we try to use a subset of features and train a model using them. Based on the inferences that we draw from the previous model, we decide to add or remove features from the subset.

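Common methods under this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. Among regularization-based approaches, LASSO and RIDGE are common ones. The regularizations are given in the equations below as reference:

Lasso: $\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$

Ridge: $\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$

That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.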

Unsupervised learning is when the labels are not available. That being said,!!! This mistake is enough for the interviewer to cancel the interview. Another noob mistake people make is not normalizing the features before running the model.
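One common form of normalization is standardization (z-scoring), which rescales each feature to zero mean and unit standard deviation. The sketch below assumes that is the kind of normalization meant; the income and age values are made up.

```python
from statistics import mean, pstdev


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical features on very different scales.
income = [30_000.0, 45_000.0, 60_000.0, 75_000.0, 90_000.0]
age = [25.0, 35.0, 45.0, 55.0, 65.0]

scaled_income = standardize(income)
scaled_age = standardize(age)

print(scaled_income)
print(scaled_age)
```

After scaling, both features live on the same scale, so distance-based or gradient-based models are no longer dominated by the feature with the largest raw numbers.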

Rule of Thumb. Linear and Logistic Regression are the most basic and commonly used machine learning algorithms out there. Before doing any analysis, fit one of them first as a benchmark. One common interview mistake people make is starting their analysis with a more complex model like a neural network. No doubt, neural networks are highly accurate. However, benchmarks are important.
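The benchmark idea can be sketched with a one-feature least-squares line compared against an even simpler "always predict the mean" baseline. The data is invented, and for brevity the error is measured on the training points; a real benchmark would use held-out data.

```python
def fit_line(xs, ys):
    """Closed-form ordinary least squares for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mse(predictions, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical data with a roughly linear relationship.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.2, 3.9, 6.1, 8.0, 9.8]

slope, intercept = fit_line(xs, ys)
linear_mse = mse([slope * x + intercept for x in xs], ys)
mean_mse = mse([sum(ys) / len(ys)] * len(ys), ys)

print(f"slope={slope:.2f} intercept={intercept:.2f}")
print(f"linear MSE={linear_mse:.4f}  mean-baseline MSE={mean_mse:.4f}")
```

Any more complex model then has a concrete number to beat, which is the point of having a benchmark.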
